Interview with Christian Hunt – Human Risk

Christian is a thought leader in behavioural science and compliance. He has 25 years of experience working in financial services. Amongst his many roles, he was the Managing Director, Head of Behavioural Science at UBS — a role created specifically for him — and is a Member of the Global Association of Applied Behavioural Scientists.

Today, Christian’s company, Human Risk, helps clients understand behavioural science to encourage genuine employee engagement with ethics & compliance. He also hosts a great podcast and has interviewed many risk managers and experts.

We spoke to Christian about how he thinks safety professionals can deploy behavioural science.

RiskPal (RP): What is it that Human Risk does?

Christian Hunt (CH): We help people to think about human risk — the risks posed by human decision-making. I define this as “the risk of people doing things they shouldn’t, or not doing things they should”.

Human risk is the largest risk facing all organisations because when things go wrong, there’s always a human component: people either cause the problem or make it worse by how they react to it, or fail to react. So, we tend to work with functions like compliance, ethics, HR or health and safety, but it’s equally relevant to other business areas. Every manager has responsibility for managing human risk on some level.

We also help organisations get the best out of their people, by working with the grain of human thinking rather than against it.

RP: Now, in your work, you borrow from various disciplines – can you explain this and why this is relevant to safety and security personnel?

CH: In simple terms, safety practitioners are trying to influence human behaviour. While this might involve changing the physical world, it’s ultimately about influencing people.

In that sense, it’s no different to advertisers who try to influence us to buy something. Or a streaming service like Netflix or a social media network like Facebook, both of which want to keep us on their platforms. Even the way governments set about encouraging mask-wearing or vaccinations during the pandemic was about influencing human decision-making. There are good and bad examples everywhere.

When people out there are collectively spending billions trying to persuade us to do something, or not to do something, there must be things that we can learn from them. I shamelessly steal and borrow ideas from other contexts that I think might be relevant.

RP: You have spoken to many safety and security professionals through the podcast. What do you think are some of the lessons that they can take from behavioural science?

CH: The key is to recognise that human beings can simultaneously be brilliant and awful. We need to think less about what we would like people to do and more about what people are likely to do.

For example, in theory, people ought to be interested in looking out for themselves. So, people should want to read a long set of policy instructions if it would keep them safer. Realistically, people are stressed and have many other things going on. They are unlikely to prioritise the things that we theoretically think they ought to.

It’s just like renting a car: you are unlikely to read the entire documentation given to you, even though being informed about the intricacies of the agreement you’re signing would be in your best interest.

We need to recognise the realities of human existence and plan around them. We should focus less on designing processes that look good on paper and more on what actually works with real human beings in the real world.

RP: What is the best example you have witnessed of behavioural science in safety?

CH: A few years ago, London Underground decided to shake up its safety announcements at one of its stations. Passengers often ignore these announcements, so the operator had children record the messages. This resonated not only because of the novelty factor but also because the human brain is tuned to pay attention to children, who we know may require our help.

RP: And the worst?

CH: Well, there are loads…

But if I had to pick one, then it would be the use of unnecessary blurb that some lawyers love. For example, the warning on coffee cups that says “Caution, Hot Liquid.” It may make sense legally, but it is potentially patronising and risks diminishing health and safety.

RP: What are the main challenges facing safety and security practitioners today?

CH: Here’s a counter-intuitive one! Nowadays, we’re better at managing risk, which should make the world safer. But actually, that can make things more dangerous!

Take cars, for example – we have added so many safety features that make risk less visible or give drivers the impression that no danger exists. Ironically, whenever a safety feature like airbags or ABS is added to cars, drivers get the message that driving is safer, so there’s a greater chance of ‘risk compensation’, where they take more risk. Equally, the fact that modern cars are quieter means we have less sense of how fast we are driving, which is why the authorities add extra signage and ‘rumble strips’ when we approach junctions. These help give drivers more of a sense of their driving speed.

It’s a constant game of trying to make people feel the risks they’re taking.

We can think of risk assessments in a similar way. In theory, we’re trying to make people think about the risks they’re taking so they can mitigate them. But people often understandably think of risk assessments as bureaucracy whose primary purpose is to protect the employer — not the employee. As a result, they may pay less attention to them.

All of which means that a safety officer’s job is never done! They constantly need to evolve their programmes and watch out for ‘safety fatigue’ or situations when, for example, a well-intentioned message inadvertently comes across as patronising. It’s a fine line between engaging and losing your audience!

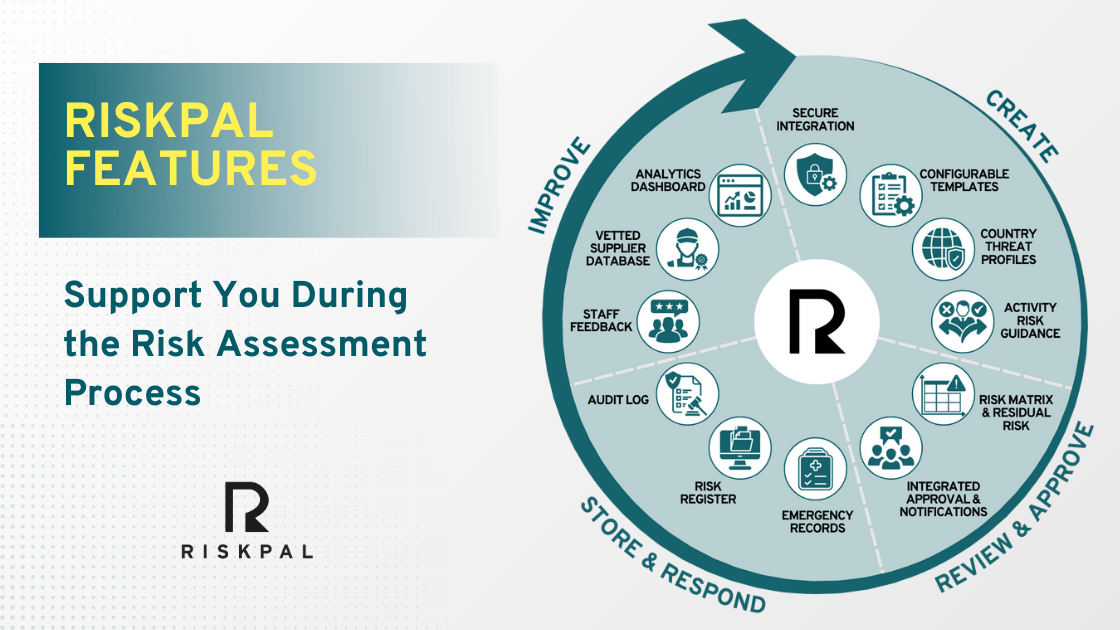

Starting to think differently about your risk assessments and safety?

Contact us to find out more about how RiskPal is transforming the risk assessment process.